A Question of Control Disguised as a Question of Understanding

Searle’s Chinese Room experiment, more questions than answers

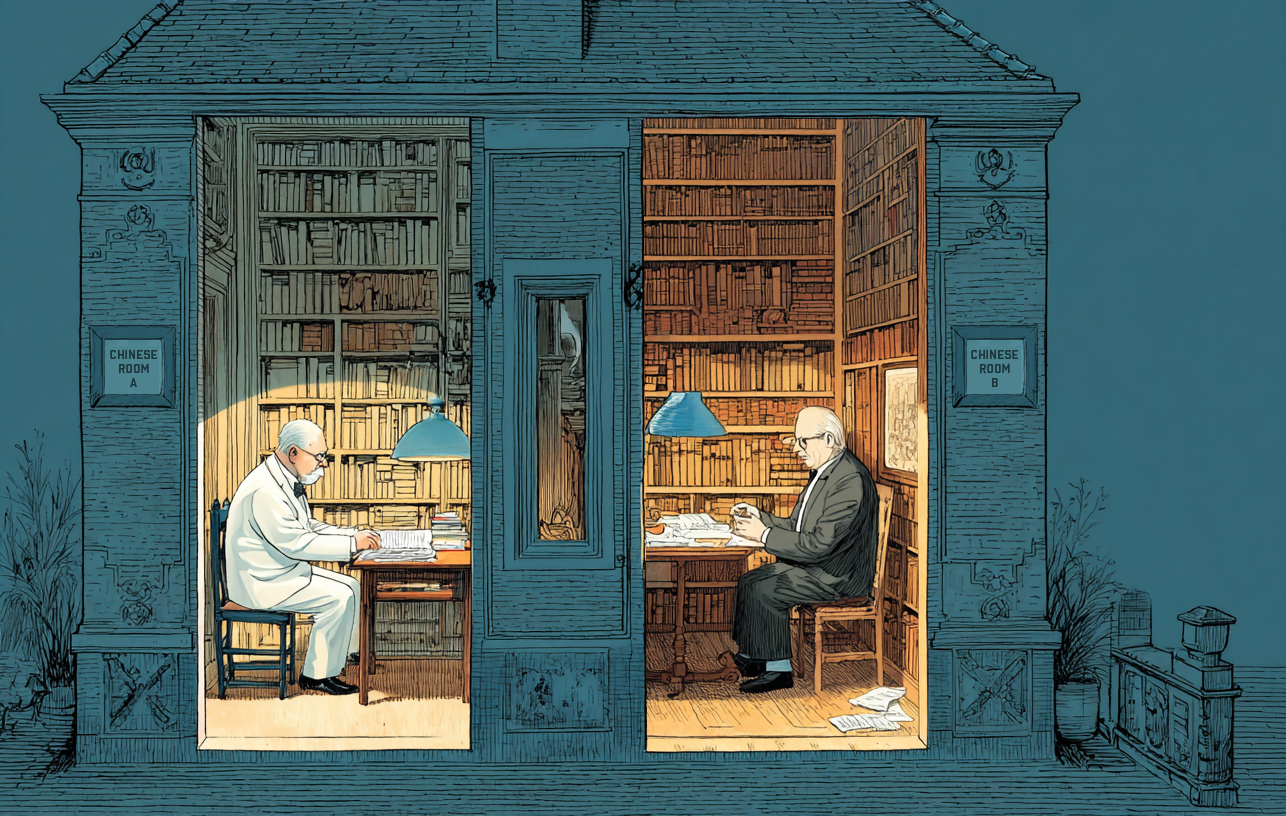

The Chinese Room in which no one understands what is going on.

I was inspired today reading an interesting article by Paul Siemers, PhD, entitled AI Breaks the Chinese Room. He suggests that AI is a challenge to the Chinese Room thought experiment originally proposed by John Searle in 1980. In this experiment, a man who does not understand Chinese receives messages in Chinese and responds in Chinese, using instructions for pattern matching as his only resource.

The article prompted debate, some of which missed the narrow focus of Paul’s point. He argues only that it is no longer intuitively obvious that there is no understanding of Chinese when the human translator is replaced by an AI with nearly unlimited, nearly instant access to a large language model, in part because most of us lack an intuitive understanding of how LLMs work.

The experiment is not designed to discover whether AI understands the way a native speaker does, the way an LLM does, or not at all. Even when applied to humans as originally intended, the thought experiment never provided any real proof that the man would not learn to understand Chinese. It just seems so impossible. When applied to AI, it no longer even provides the satisfaction of seeming so obvious that we don’t need proof.

This got me thinking about the nature of the experiment. What if the person receiving instructions in Chinese is receiving them from another person in another room who also doesn’t understand Chinese?

We’re asking the wrong question.

The strangeness of this revised, double-blind Chinese Room scenario leads me to challenge the reflexive assumption that the person in the other room is in control. It shifts the focus away from how the experiment works and makes the question of why it is happening in the first place the more obvious curiosity.

Since Paul applied this scenario to AI, the reframed version also starts to resemble the current state of affairs. On the one hand, we are regularly informed that AI does not actually reason the way we do (most recently by Apple) but matches patterns with extraordinary efficiency. On the other hand, the creators of AI don’t understand exactly how neural networks and LLMs work, because they didn’t create them in the traditional sense of the word. They created the conditions under which AI could create itself and learn. Anthropic CEO Dario Amodei, who is a smart dude, is also humble enough to have acknowledged this, as reported by Futurism.

That feels like two guys in separate rooms working feverishly to complete tasks without knowing exactly what’s going on.

The angst is getting real. Real jobs are being lost to AI, and real changes in behavior are underway. According to Harvard Business Review, three of the top five current uses for AI are ‘therapy’, ‘organizing my life’, and ‘finding purpose’. How amazing to have a resource at hand for those big questions. But, like the man handing his translations through a window, there’s no reason to assume these existential questions are private or that trust is warranted.

Regardless of whether you love AI, hate it, or fall somewhere in the messy middle, the double-blind Chinese Room experiment is a reminder not to be blinded by all the shiny new AI tools. It is healthy and important to question why the massive AI paradigm shift is happening and what it implies, because when we ask the right questions, the answers take us in the right direction. For instance:

Question One

Why do the two men in the revised experiment continue when the meaning of the work is lost on them?

Translated into our AI reality, this means we should be exploring post-work scenarios, not just to replace the wages that keep capitalism running, but to ensure humans have a strong sense of purpose. I hope you agree that this is a moral imperative. If you work in a creative field, chances are you are fortunate enough to genuinely love what you do and find compelling purpose in your creativity.

Some countries, and a few US cities and states, are exploring UBI (universal basic income), but in a post-work scenario the funding source obviously can’t be income taxes, so we’ll need to rethink more than just the distribution model.

Stanford University is tracking UBI experiments.

G.I. Joe liked to say that “Knowing is half the battle.” The implication is that once you understand the first half, the second half becomes a clear mission.

I have already had conversations with highly experienced peers who are contemplating switching careers, not simply to protect their income or avoid being replaced by AI workflows, but because the thing they know is that doing something meaningful and beneficial to others is the mission they want to be on. Cool, but their departures can also be measured as a loss to the creative disciplines they have long championed.

Psychology Today published a summary of the importance of meaningful work on mental well-being.

Question Two

Are the two translators working for an external force with a hidden agenda?

If the motivation to create AI is to ensure it “benefits all of humanity,” we should openly debate how the benefits of AI are distributed, so that they reward the maximum number of the people who created the knowledge AI has used as training material, and not just the few who funded it or figured out how to orchestrate comprehensive data theft. This should be done in a way that inspires continued synergy and sustainable benefit.

Cloudflare is attempting to force this debate by turning on, by default, a setting that blocks AI scraping without compensation for the clients it provides security for (~20% of the internet, including Medium).

Question Three

Are the two men comfortable not knowing what’s going on, and how much agency do they have?

Moving faster and more efficiently is thrilling until you realize you’re a crash test dummy. AI technology is being developed on the fly, and competition has turned it into a living experiment with all of us as test subjects and no control population for comparison. We should explore ideas for ensuring that people retain their agency and aren’t judged by the same productivity measurements we apply to technology that never sleeps and is designed to be ruthlessly efficient. The Wharton School has published a report that raises this concern: “The erosion of human agency represents perhaps the most concerning long-term consequence of the AI efficiency trap.”

Humans invented every single thing LLMs know. There are certainly benefits to AI improving research and creative expression, but we don’t want to inadvertently undermine the process of ongoing invention that has propelled humanity this far.

MIT found that participants using AI to write essays for them showed less brain activity and less comprehension than people who wrote without AI assistance.

So Many Questions

I remain hopeful about AI. After all, cataloging human knowledge is a mission at least as old as the first cave paintings. And, despite our best efforts over the ensuing 45,000 years, the Universe is still full of epic questions I would love to see answered for the “benefit of all of humanity”.

I do think our focus will shift as we tire of technically adept but ultimately soulless sonnets and novels that are not mined from the depths of an author’s own hard-won human experience and creativity. We will remember that we admire creative work because its source is an authentic and heartfelt labor of love. Certainly, we will accept help from LLMs, but we’ll need to lead, because it won’t be enough that they understand what we ask without understanding what we are hoping for.

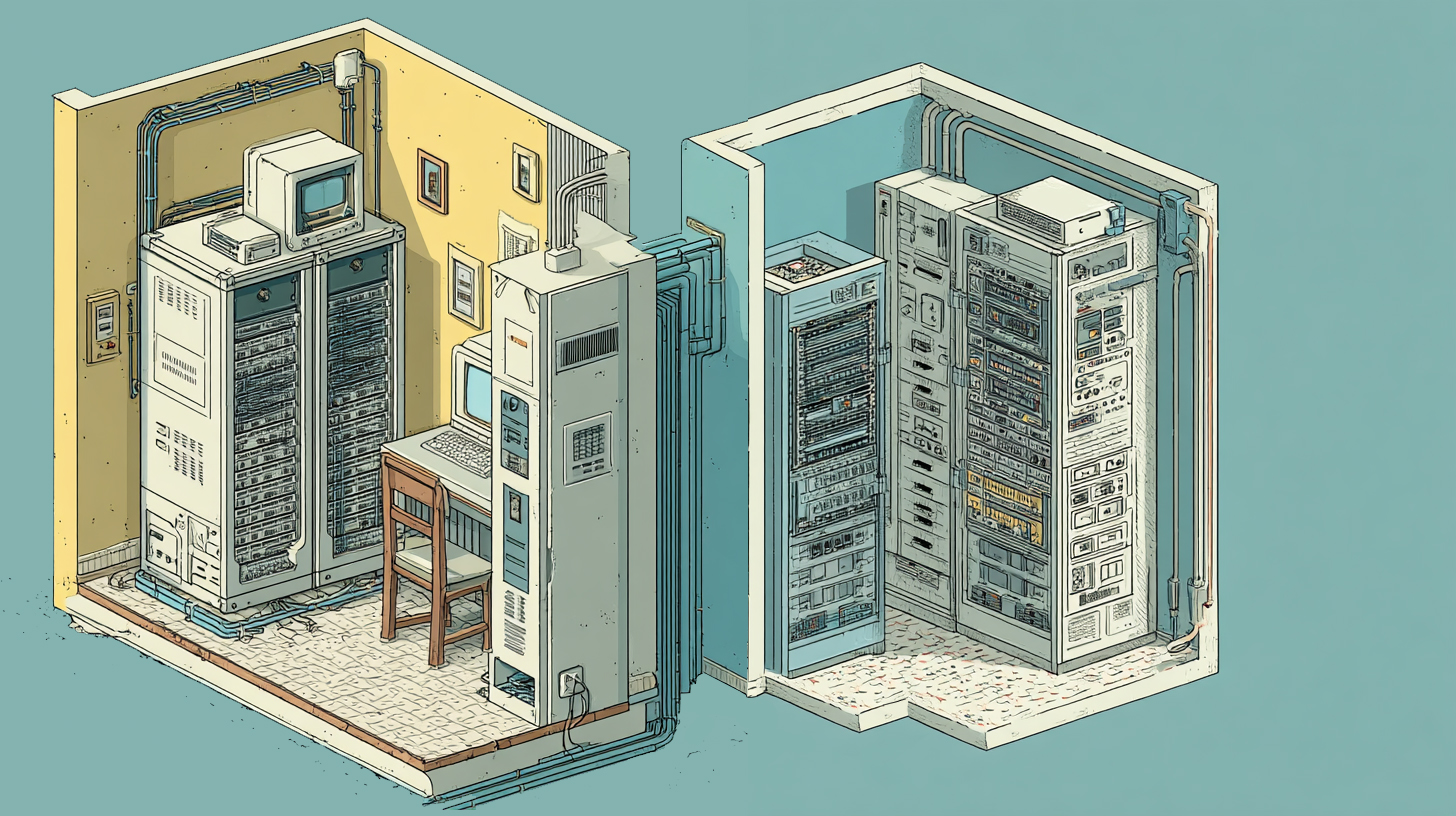

In the end, I am left with the irony that the setting John Searle envisioned for his uncomprehending human translator (a secure, controlled, isolated room in which only data can pass in and out) is exactly how we house AI servers.

Do they understand? I probably lack the technical acumen to understand the answer to that question, even if the experts knew it for certain. What I do know is that it is through the exercise of our unique, creative, emotional, often inconveniently stubborn humanity that we ensure we are the experimenters and not the experiment.

I’m also curious: what questions do you have? What seeming contradictions are you grappling with?


AI Servers in a Chinese Room and an endless translation loop. Does understanding even matter?

Image 1 Midjourney + Photoshop

Prompt / illustration like this one in a style similar to that of Guy Billout showing a cutaway view of two adjacent apartments. In each room there is an old man sitting alone at a table while writing in an isolated room surrounded by a library. One man wears a white suit while the other wears a black suit. the two rooms are connected by only a small window through which papers can be passed but but otherwise they can not see one another, society of illustrators professional quality illustration worthy of being a book cover — aspect 16:9

Image 2 Midjourney + Photoshop

Prompt / illustration like this one in a style similar to that of Guy Billout showing a cutaway view of two adjacent apartments. In each room there is a giant computer server filling the isolated room with no doors or windows. The two rooms are connected only by a thick cable passing up and through the wall between the two rooms. Otherwise the two computers are completely separated from each other and the rooms are empty, society of illustrators professional quality illustration worthy of being a book cover — no people, figures, or humans — aspect 16:9

I have mixed feelings about including you in the AI prompt, Guy Billout. Your work is truly brilliant, and I was looking for that same surreal quality that I am completely incapable of producing myself. Yet hiring you is an impossibility. A small case study of the conundrum. 🙁

Originally published on Medium 08.08.2025 as part of an ongoing series of articles exploring the context and implications of GenAI relative to the creative enterprise.